***Updated February 10th 2010***

Title of Primary Project Output:

The Bibliosight desktop application will allow users to specify an appropriate query, retrieve bibliographic data as XML from the Web of Science using the recently released (free) WoS API (WS Lite), and convert it into a suitable format for repository ingest via SWORD.*

*Due to current limitations of WS Lite, the functionality to convert XML output has not been implemented – see this post on Repository News for more details.

Screenshots or diagram of prototype:

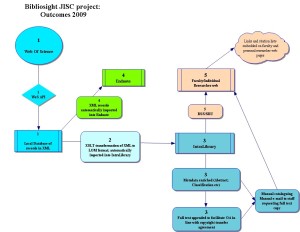

Diagram of how returned XML will be mapped onto LOM XML for ingest to intraLibrary:

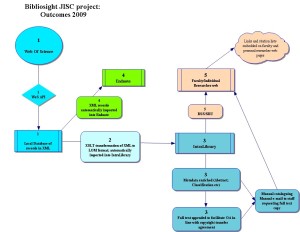

The full Bibliosight process:

Description of Prototype:

The prototype is a desktop application written in Java that is linked to Thomson Reuters’ WS Lite, an API that allows the Web of Science to be queried by the following fields:

| Field | Searchable code |
| --- | --- |
| Address (including the 5 fields below) | AD |
| 1. Street | SA |
| 2. City | CI |
| 3. Province/State | PS |
| 4. Zip/postal code | ZP |
| 5. Country | CU |
| Author | AU |
| Conference (including title, location, date, and sponsor) | CF |
| Group Author | GP |
| Organization | OG |
| Sub-organization | SG |
| Source Publication (journal, book or conference) | SO |
| Title | TI |
| Topic | TS |

Queries may also be specified by date*, and the service supports the AND, OR, NOT, and SAME Boolean operators.

*The date on which a record was added to WoS rather than the date of publication. In most cases the year will be the same but there will certainly be some cases where an article published in one year will not have been added to WoS until the following year.
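As a rough illustration of how the field codes and operators fit together, here is a minimal Java sketch that assembles a query string in the Code=(query parameter) form. `WosQueryBuilder` and its methods are hypothetical names, not part of the Bibliosight code or the WS Lite API, and joining clauses with an operator between them is an assumption about the query syntax. SAME is left out because this post does not describe how it is placed.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

/** Hypothetical helper for illustration only; not taken from the Bibliosight code. */
final class WosQueryBuilder {
    // Searchable codes copied from the table above.
    private static final Set<String> CODES = new HashSet<>(Arrays.asList(
            "AD", "SA", "CI", "PS", "ZP", "CU", "AU",
            "CF", "GP", "OG", "SG", "SO", "TI", "TS"));

    private final StringBuilder query = new StringBuilder();

    WosQueryBuilder(String code, String value) {
        query.append(clause(code, value));
    }

    /** Formats a single clause, e.g. AD=(leeds met* univ*). */
    static String clause(String code, String value) {
        if (!CODES.contains(code)) {
            throw new IllegalArgumentException("Unknown field code: " + code);
        }
        if (value == null || value.trim().isEmpty()) {
            throw new IllegalArgumentException("Empty query parameter for " + code);
        }
        return code + "=(" + value.trim() + ")";
    }

    WosQueryBuilder and(String code, String value) { return join("AND", code, value); }

    WosQueryBuilder or(String code, String value) { return join("OR", code, value); }

    WosQueryBuilder not(String code, String value) { return join("NOT", code, value); }

    // Assumption: clauses are combined as "<clause> OP <clause>".
    private WosQueryBuilder join(String operator, String code, String value) {
        query.append(' ').append(operator).append(' ').append(clause(code, value));
        return this;
    }

    String build() {
        return query.toString();
    }

    public static void main(String[] args) {
        // "smith" is a made-up author value used only for the example.
        String q = new WosQueryBuilder("AD", "leeds met* univ*").and("AU", "smith").build();
        System.out.println(q); // prints AD=(leeds met* univ*) AND AU=(smith)
    }
}
```

Checking the code against the table before sending anything catches a mistyped field code locally rather than relying on an error from the service.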

An overview of the application is as follows:

Query options: Query – Allows the user to specify the fields to query in the form Code=(query parameter). The service supports wildcards, e.g. AD=(leeds met* univ*)

Query options: Date – Allows the user to specify either an inclusive date range or recent updates from the last week, two weeks or four weeks

Query options: Database: DatabaseID – Currently WOS only. To keep the client as flexible as possible, this field is included so that additional database IDs can be accommodated, for example if it becomes possible to plug in other databases in the future.

Query options: Database: Editions – These checkboxes reflect the Citation Databases filter within WoS:

- AHCI – Arts & Humanities Citation Index (1975-present)

- ISTP – Conference Proceedings Citation Index- Science (1990-present)*

- SCI – Science Citation Index (1970-present)

- SSCI – Social Sciences Citation Index (1970-present)

*ISTP is the code currently used by the API; it is not clear why it does not match the term now used in WoS, which is CPCI-S – Conference Proceedings Citation Index- Science (1990-present)

Retrieve Options: Start Record – Allows the user to specify the first record to return from the full result set

Retrieve Options: Maximum records to retrieve – Allows the user to specify the maximum number of records to retrieve, between 1 and 100 (N.B. The API is currently restricted to a maximum of 100 records, though it can be queried multiple times.)

Retrieve Options: Sort by (Date) (Ascending/Descending) – Allows the user to sort records, currently by date only, in ascending or descending order.

Proxy settings: This is purely for local network setup at Leeds Met and has nothing to do with WoS but will be necessary for users that are behind a proxy server.

View results: View results of current query (as XML)

Save results: Save results of current query

Perform search request: Perform the specified query
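Because the API returns at most 100 records at a time but can be queried repeatedly, a larger result set has to be fetched in batches using the start record and maximum records options. The sketch below only works out the sequence of (start record, count) pairs and does not call WS Lite. `RetrievePager` is a hypothetical name, and a 1-based start record is an assumption that this post does not confirm.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper for illustration only; it performs no network calls. */
final class RetrievePager {
    // Limit stated in the overview above.
    static final int MAX_PER_REQUEST = 100;

    /**
     * Splits a request for `wanted` records, beginning at `startRecord`,
     * into {startRecord, count} pairs of at most MAX_PER_REQUEST each.
     * Assumption: record numbering starts at 1.
     */
    static List<int[]> pages(int startRecord, int wanted) {
        if (startRecord < 1 || wanted < 0) {
            throw new IllegalArgumentException("startRecord must be >= 1 and wanted >= 0");
        }
        List<int[]> pages = new ArrayList<>();
        int next = startRecord;
        int remaining = wanted;
        while (remaining > 0) {
            int count = Math.min(remaining, MAX_PER_REQUEST);
            pages.add(new int[] {next, count});
            next += count;
            remaining -= count;
        }
        return pages;
    }

    public static void main(String[] args) {
        // 250 records from record 1: batches of 100, 100 and 50.
        for (int[] page : pages(1, 250)) {
            System.out.println("start=" + page[0] + " count=" + page[1]);
        }
    }
}
```

Each pair would then be used as the Start Record and Maximum records to retrieve values for one search request.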

Link to working prototype:

Distributing a working prototype is problematic because it has a number of dependencies, some of which are specific to the WS Lite service. In our view it is less confusing to release the code only, which is available from http://code.google.com/p/bibliosight/

A screen-cast of the working prototype is available here.

Please note that you will require an appropriate subscription to ISI Web of Knowledge, and the service requires an authorised IP address. You will also need to register for the Thomson Reuters Web of Science® web services programming interface (WS Lite) by agreeing to the Terms & Conditions at http://science.thomsonreuters.com/info/terms-ws/ and completing a registration form. If you have any problems, you should contact your Thomson Reuters account manager.

Link to end user documentation:

End user documentation: https://bibliosightnews.wordpress.com/end-user-documentation/

About the project: https://bibliosightnews.wordpress.com/about/

For use cases see: https://bibliosightnews.wordpress.com/use-cases/

Link to code repository or API:

The code is available from http://code.google.com/p/bibliosight/

Link to technical documentation:

Technical documentation for WS Lite is available from Thomson Reuters and you should address enquiries to your Thomson Reuters account manager.

The code available from http://code.google.com/p/bibliosight/ is fully commented.

Date prototype was launched:

February 9th 2010 (This is code only, not a distribution of a working prototype – there is some very basic info in there on what you’d need to get it running.)

Project Team Names, Emails and Organisations:

Wendy Luker (Leeds Metropolitan University) w.luker@leedsmet.ac.uk

Arthur Sargeant (Leeds Metropolitan University) a.sargeant@leedsmet.ac.uk

Peter Douglas (Intrallect Ltd) p.douglas@intrallect.com

Michael Taylor (Leeds Metropolitan University) m.taylor@leedsmet.ac.uk

Nick Sheppard (Leeds Metropolitan University) n.e.sheppard@leedsmet.ac.uk

Babita Bhogal (Leeds Metropolitan University) b.bhogal@leedsmet.ac.uk

Sue Rooke (Leeds Metropolitan University) s.rooke@leedsmet.ac.uk

Project Website:

https://bibliosightnews.wordpress.com/

PIMS entry:

https://pims.jisc.ac.uk/projects/view/1389

Table of Contents for Project Posts:

- First Post

- Quickstep into rapid innovation project management

- Project meeting number 1: Draft Agenda

- Project meeting – minutes

- eurocris

- JournalTOCs

- SWOT analysis – a digital experiment

- Generating use-cases

- No one said it would be easy

- SWOT update

- Project meeting number 2: Draft Agenda

- Use case meeting

- 20 second pitch at #jiscri

- Project meeting – minutes

- Small but important win – we have XML!

- Research Excellence Framework: Second consultation on the assessment and funding of research

- JISC Rapid Innovation event at City of Manchester stadium

- Quick reminder(s)

- Just round the next corner…

- Project meeting number 3: Draft agenda

- More on ResearcherID

- User participation

- Project meeting – minutes

- Quick sketch

- Visit from Thomson Reuters

- Project meeting number 4: Draft agenda

- Project meeting – minutes

- Thinking out loud…

- Quick sketch #2

- Mapping fields from WoS API => LOM

- Project meeting number 4: Draft agenda

- The role of standards in Bibliosight

- Project meeting – minutes

- Web Services Lite

- JournalTOCsAPI workshop

- Steady as she goes – Bibliosight back on course